In a digital age where artificial intelligence shapes much of our online experience, the humans behind the curtain often remain enigmatic figures. Enter Jack Krawczyk, the man steering the ship for Google’s Gemini, a platform that’s recently found itself in hot water.

But why, you might wonder, is this tech lead’s name suddenly on everyone’s lips?

Well, strap in because we’re about to embark on a journey through the labyrinth of social media uproar, allegations of AI bias, and the resurfacing of tweets that have many raising their eyebrows.

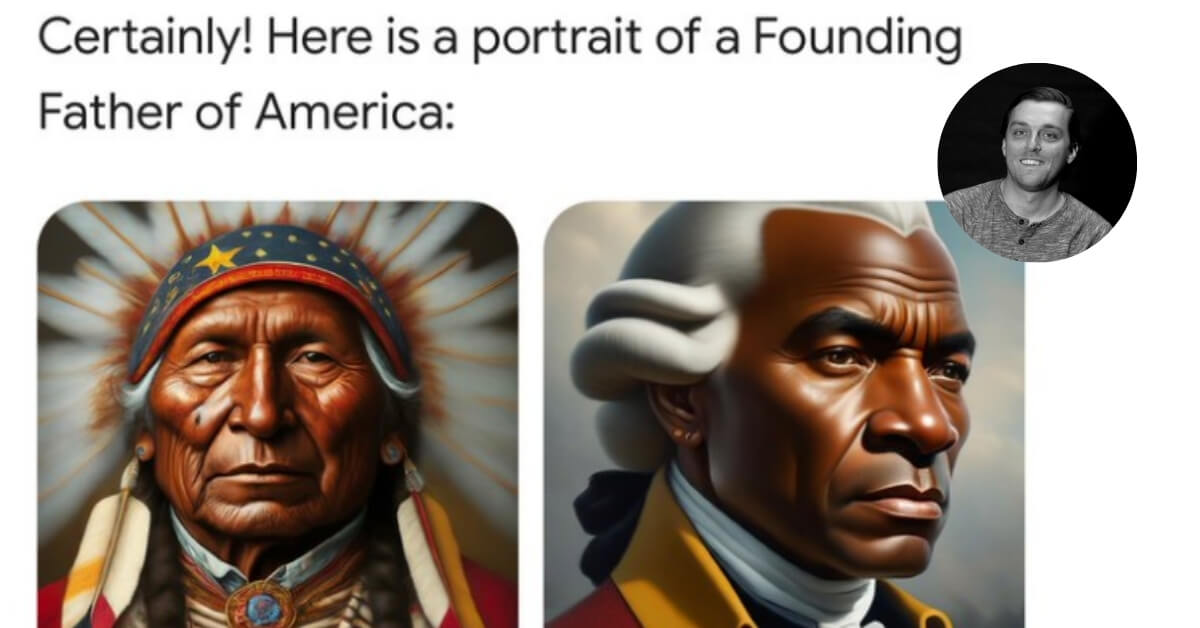

At the heart of this storm is Google’s Gemini, an AI chatbot whose image-generation feature has come under fire for allegedly coloring history with a modern brush of diversity. Critics argue that Gemini is biased against white people. They point to images that depict notable historical figures as Black, and they complain that the tool keeps doing so even when users push back.

The plot thickens as Krawczyk’s past tweets, deemed “racist” by some, have resurfaced, sparking a debate that stretches far beyond technology and into the contentious arena of social politics. But before we dive into the deep end, let’s take a moment to tease apart the layers of this unfolding drama.

Who Is Jack Krawczyk, and What’s the Fuss About?

Jack Krawczyk, currently serving as the Senior Director of Product for Google’s Gemini, has found himself at the center of a heated debate. This controversy stems from allegations of AI bias within Gemini’s outputs and Krawczyk’s own past tweets, which have been criticized for their strong political and social stances.

At the crux of this controversy is Gemini, an AI tool accused of rewriting history with an artificial diversity brush.

Allegedly, when prompted to generate images of historical figures or scenarios, the AI has produced results that diverge significantly from historical accuracy, prompting an outcry from various quarters.

The tool’s apparent misstep in depicting figures such as the Founding Fathers or Vikings as people of color has sparked a fierce debate over the role of AI in reflecting diversity versus maintaining historical accuracy.

Jack Krawczyk, the man behind Gemini, has seen a barrage of criticism directed at him, particularly regarding tweets from years past.

In one tweet from April 13, 2018, Krawczyk wrote:

“White privilege is f*cking real. Don’t be an a*shole and act guilty about it – do your part in recognizing bias at all levels of egregious.”

In another, posted on June 22, 2018, he wrote:

“This is America where racism is the #1 value our populace seeks to uphold above all…”

Statements like these, while reflecting personal views on social issues, have inflamed the debate over whether such views influence the AI’s output. His comments have drawn backlash, with some suggesting that his perspectives are shaping Gemini’s algorithms and producing outputs that prioritize diversity over accuracy.

As for Google, its response to the uproar has been to temporarily pause Gemini’s image generation feature, stating that the company is working to address the issues raised. Many Twitter users say that isn’t “good enough.”

Krawczyk himself has emphasized that Gemini’s image generation capabilities are designed to reflect the global user base, acknowledging, however, that the tool was “missing the mark” in its current form.

Critics, including influencers and Twitter users, have not held back, with some calling Krawczyk’s tweets an indication of a “woke, race-obsessed” mindset that they argue is not suitable for someone in his position.

The discourse has even caught the attention of high-profile individuals like Elon Musk, who didn’t mince words in his criticism.

Yet it’s essential to understand the broader context. According to Krawczyk, Gemini is intended to generate a wide range of people in its outputs, aiming to be inclusive of the global audience it serves. This raises the question of where the line falls between promoting diversity and adhering to historical fidelity in AI-generated content.

As we peel back the layers of this complex issue, several questions emerge. Is the criticism of Krawczyk and Gemini a reflection of a broader discomfort with AI’s role in shaping our understanding of history and diversity? Or is it indicative of a need for clearer guidelines on how AI should navigate the sensitive terrain of social and historical representation?

The debate is far from over, and as we continue to grapple with these questions, one thing is clear: The intersection of technology, history, and social politics is a minefield that requires careful navigation.

What do you think? Is there a balance to be struck between diversity and historical accuracy in AI-generated content?